What will our workspace look like in 2020?

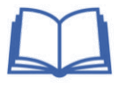

Is your next workspace going to be 3D? I don't just mean your employer offering you a stand-up desk: will true three-dimensional display technology ever become mainstream? The latest incarnation of 3D displays appears to use holographic technology, such as the forthcoming ‘Hydrogen’ smartphone, which is advertised on www.red.com as ‘The world’s first holographic media machine… No glasses needed.’

The industry has been experimenting with 3D displays since the early days of computer graphics. At the 1981 ACM SIGGRAPH convention in Dallas, 14,000 visitors were treated not only to the introduction of Donkey Kong but also to a peek into what looked like a 40-gallon drum lined with mirrors, with a wireframe space shuttle floating inside it. Throughout the 1990s and 2000s, subsurface geoscientists and engineers hung out in corporate showcase 3D ‘caves’, hoping to get a more hands-on impression of their territory. Now we have VR headsets. If you’ve been there, would you like to make a 3D environment your preferred base?

At this stage, it still seems as though 3D displays will keep cycling through innovative technologies that end up sidetracked by problems with connecting logically to the analytical brain, with wearability, or with processing speed and software. The mind interacts with a true 3D display quite differently from how it interacts with a 3D projection on a 2D display, and it is hard to pin down what a control panel for such a display should look like. As a result, current 3D visualization applications aren’t automatically suited to driving true 3D displays.

Touch and Go

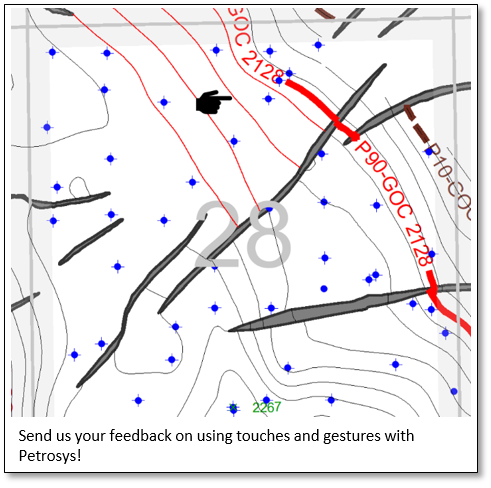

How will you be controlling what your information device does for you? When the mouse was introduced, a lot of people thought it was a graphics gimmick that would never find a use in word processing. Light pens and, later, touch screens have been with us for a long time, but we are only just starting to get comfortable with the idea of pointing and stroking on our displays. In the past, touch displays fell out of favor because of the discomfort of holding your hand up unsupported for long periods, erratic performance, and the physical deterioration of displays under constant tactile attack. However, pointing, dragging, pinching and other gestures do seem to have become established in how we expect to drive our applications. Petrosys doesn’t yet have explicit support for touch controls, but standard Windows touch functionality can enable some interesting workflows.

While experimenting with Windows touch in Petrosys mapping recently, one of our support people got quite comfortable with tapping and dragging objects, but, interestingly, came unstuck when applying Mac-based habits such as a two-finger tap to bring up context-sensitive menus. On my previous touchscreen laptop (I reverted to a non-touch model because the touch screen became fiendishly uncooperative), the intuitive smartphone gesture I missed most was pinching to zoom in and out on the Petrosys map.

Talk to Me

Will your workspace be listening to you? Early interactive graphics programs used typed pseudo-natural-language commands such as ‘Raise surface by 208 meters’, simply because the systems didn’t support menus and icons. Now that speech recognition and natural language interpretation are readily available (albeit often with Greta Google or Sammy Samsung listening in), and menus and icons are becoming ever more complex and overcrowded, it’s a good time to reconsider natural language controls driven by voice recognition. Of course, if your workstation is running a dozen different applications at once, unlike the usual single window on your phone, your master listener will need some real intelligence to work out which application you are muttering to. If conversational natural language controls were more widely used, the next logical step would be for your master listening process to start thinking about your workflow, perhaps even suggesting improvements…

The choice between touch/gesture, menu/icon, and natural language controls can have an interesting side effect on how applications balance workflow standardization on the one hand and creative knowledge analysis on the other. The relatively limited controls available through touch and gesture encourage applications to offer well-defined paths through a workflow, making a process easy to repeat once it has been discovered. The menus and icons in our current applications offer simultaneous access to a much broader range of options, allowing more diverse workflows that grow out of exploring the menu system. A natural language interface can offer access to an unlimited range of options and processing paths, albeit without the visual cues that prompt users toward what is available.

For 2018, don’t expect to have to unlearn the menus and icons that you’ve become familiar with in Petrosys PRO, although we are looking forward to users reaping the benefits of our new map templates. Petrosys has a good track record of upgrading its user interfaces to meet contemporary expectations, and we would like to hear your ideas on how you’d like to be asking our systems to create maps and analyze data as we move towards 2020.

Get in touch

If you would like to know more about Petrosys PRO, contact our team of expert Mapping Gurus.